::: {.cell .border-box-sizing .text_cell .rendered} ::: {.prompt .input_prompt} :::

::: {.inner_cell} ::: {.text_cell_render .border-box-sizing .rendered_html} Joining many DataFrames at once with Reduce ===========================================

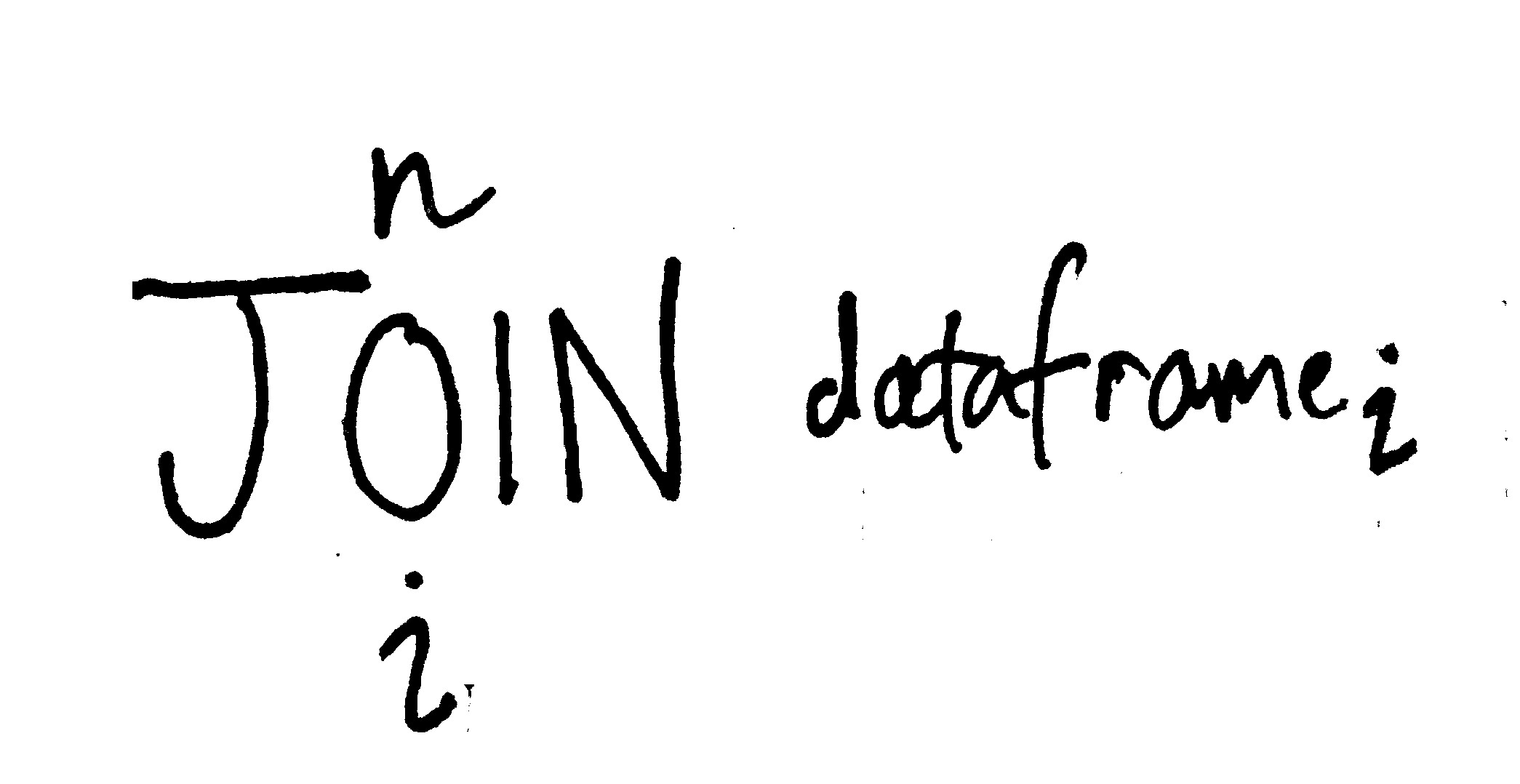

In my last project I wanted to compare many different Gender Inequality Indexes at once, including the one I had just come up with, called "WIGI". The problem was that the rank and score data for each index was in a separate DataFrame. I need to perform repeated SQL-style joins. In this case I actually only had to join 5 dataframes, for 5 indices. But later, in helping my partner with her research, she came across the same problem needed to join more than 100. In my mind I saw that we wanted to accomplish this n-ary join. Mathematically I wanted this type of operation, which I couldn't find in pandas.

The answer I enjoyed implementing, perhaps because I saw it as this type of repeated operation, is the reduce of functional programming.

Ok, say we have these two data sets: ::: ::: :::

::: {.cell .border-box-sizing .code_cell .rendered} ::: {.input} ::: {.prompt .input_prompt} In [5]: :::

::: {.inner_cell} ::: {.input_area} ::: {.highlight} wigi ::: ::: ::: :::

::: {.output_wrapper} ::: {.output} ::: {.output_area} ::: {.prompt .output_prompt} Out[5]: :::

::: {.output_html .rendered_html .output_subarea .output_pyout} ::: {style="max-height: 1000px; max-width: 1500px; overflow: auto;"}

| ::: ::: ::: ::: ::: ::: | Rank | Score |

|---|---|---|

| Economy | ||

| Republic of China | 1 | 0.356890 |

| Kingdom of Denmark | 2 | 0.347826 |

| Sweden | 3 | 0.345212 |

| South Korea | 4 | 0.343662 |

| Hong Kong | 5 | 0.342857 |

::: {.cell .border-box-sizing .code_cell .rendered} ::: {.input} ::: {.prompt .input_prompt} In [6]: :::

::: {.inner_cell} ::: {.input_area} ::: {.highlight} world_economic_forum ::: ::: ::: :::

::: {.output_wrapper} ::: {.output} ::: {.output_area} ::: {.prompt .output_prompt} Out[6]: :::

::: {.output_html .rendered_html .output_subarea .output_pyout} ::: {style="max-height: 1000px; max-width: 1500px; overflow: auto;"}

| ::: ::: ::: ::: ::: ::: | Rank | Score |

|---|---|---|

| Economy | ||

| Iceland | 1 | 0.8594 |

| Finland | 2 | 0.8453 |

| Norway | 3 | 0.8374 |

| Sweden | 4 | 0.8165 |

| Denmark | 5 | 0.8025 |

::: {.cell .border-box-sizing .text_cell .rendered} ::: {.prompt .input_prompt} :::

::: {.inner_cell} ::: {.text_cell_render .border-box-sizing .rendered_html} We'd probably join them like this: ::: ::: :::

::: {.cell .border-box-sizing .code_cell .rendered} ::: {.input} ::: {.prompt .input_prompt} In [7]: :::

::: {.inner_cell} ::: {.input_area} ::: {.highlight} wigi.join(world_economic_forum, how='outer', lsuffix='_wigi', rsuffix='_wef') ::: ::: ::: :::

::: {.output_wrapper} ::: {.output} ::: {.output_area} ::: {.prompt .output_prompt} Out[7]: :::

::: {.output_html .rendered_html .output_subarea .output_pyout} ::: {style="max-height: 1000px; max-width: 1500px; overflow: auto;"}

| ::: ::: ::: ::: ::: ::: | Rank_wigi | Score_wigi | Rank_wef | Score_wef |

|---|---|---|---|---|

| Economy | ||||

| Denmark | NaN | NaN | 5 | 0.8025 |

| Finland | NaN | NaN | 2 | 0.8453 |

| Hong Kong | 5 | 0.342857 | NaN | NaN |

| Iceland | NaN | NaN | 1 | 0.8594 |

| Kingdom of Denmark | 2 | 0.347826 | NaN | NaN |

| Norway | NaN | NaN | 3 | 0.8374 |

| Republic of China | 1 | 0.356890 | NaN | NaN |

| South Korea | 4 | 0.343662 | NaN | NaN |

| Sweden | 3 | 0.345212 | 4 | 0.8165 |

::: {.cell .border-box-sizing .text_cell .rendered} ::: {.prompt .input_prompt} :::

::: {.inner_cell} ::: {.text_cell_render .border-box-sizing .rendered_html} But we want to generalize. Notice here we also inject the name of the DataFrame into the column names to avoid "suffix-hell" as I would like to term it. ::: ::: :::

::: {.cell .border-box-sizing .code_cell .rendered} ::: {.input} ::: {.prompt .input_prompt} In [1]: :::

::: {.inner_cell} ::: {.input_area} ::: {.highlight} import pandas

def make_df(filename):

df = pandas.DataFrame.from_csv(filename)

name = filename.split('.')[0]

df.columns = map(lambda col: '{}_{}'.format(str(col), name), df.columns)

return df

filenames = !ls

dfs = [make_df(filename) for filename in filenames]

::: ::: ::: ::: :::

::: {.cell .border-box-sizing .text_cell .rendered} ::: {.prompt .input_prompt} :::

::: {.inner_cell} ::: {.text_cell_render .border-box-sizing .rendered_html} Now here's the reducer. I actually end up wanting an inner join in the end, but the type of join is not important to illustrate the fact.

Here we join 5 DataFrames at once. ::: ::: :::

::: {.cell .border-box-sizing .code_cell .rendered} ::: {.input} ::: {.prompt .input_prompt} In [2]: :::

::: {.inner_cell} ::: {.input_area} ::: {.highlight} def join_dfs(ldf, rdf): return ldf.join(rdf, how='inner')

final_df = reduce(join_dfs, dfs) #that's the magic

final_df.head()

::: ::: ::: :::

::: {.output_wrapper} ::: {.output} ::: {.output_area} ::: {.prompt .output_prompt} Out[2]: :::

::: {.output_html .rendered_html .output_subarea .output_pyout} ::: {style="max-height: 1000px; max-width: 1500px; overflow: auto;"}

| ::: ::: ::: ::: ::: ::: | Score_gdi | Rank_gdi | Score_gei | Rank_gei | Rank_sigi | Score_sigi | Rank_wdf | Score_wdf | Rank_wef | Score_wef |

|---|---|---|---|---|---|---|---|---|---|---|

| Economy | ||||||||||

| Nicaragua | 0.912 | 102 | 74 | 37 | 53 | 0.8405 | 13 | 0.272727 | 6 | 0.7894 |

| Rwanda | 0.950 | 80 | 77 | 19 | 43 | 0.8661 | 134 | 0.096154 | 7 | 0.7854 |

| Philippines | 0.989 | 17 | 76 | 26 | 57 | 0.8235 | 6 | 0.322785 | 9 | 0.7814 |

| Belgium | 0.977 | 38 | 79 | 12 | 1 | 0.9984 | 73 | 0.163734 | 10 | 0.7809 |

| Latvia | 1.033 | 52 | 77 | 19 | 24 | 0.9489 | 82 | 0.157623 | 15 | 0.7691 |

::: {.cell .border-box-sizing .text_cell .rendered} ::: {.prompt .input_prompt} :::

::: {.inner_cell}

::: {.text_cell_render .border-box-sizing .rendered_html}

I really like the elegance of this solution. I admit there may be other ways to go about it with pandas only, and I understand the R mentality of "no for loops". Still this is precisely why I like pandas in python - you still get the freedom to play as you wish if it makes more sense to you.

:::

:::

:::